IntelliJ, Junie + Ollama

Introduction

- Brief overview of the IntelliJ IDE and its AI tools, AI Assistant and Junie

- Importance of AI-powered coding assistance for developers

- Challenges with IntelliJ’s AI Assistant credit limitations

- Purpose of the article: a guide to integrating Ollama with the Qwen 2.5 Coder 7B model for a cost-effective, powerful local AI solution

Assumption: This article assumes that readers are familiar with:

- JetBrains IDEs

- How AI agents help with coding and development

- AI Assistant and Junie, the official JetBrains plugins that act as AI agents in their IDEs

Why Use Ollama with IntelliJ’s AI Assistant?

- The Problem with Credits: IntelliJ’s AI Assistant has limited credits, even on paid plans, so power users cannot work with AI without worrying about running out

- Privacy and Control: Benefits of running AI models locally (data privacy, no internet dependency). At my firm, we avoid sending queries to remote LLM services as a security measure.

- Cost Efficiency: Open-source models like Qwen 2.5 Coder 7B via Ollama eliminate recurring costs

- Performance: Qwen 2.5 Coder 7B’s capabilities for coding tasks (e.g., autocompletion, code explanation, generation)

Ollama + Qwen 2.5 Coder on Google Colab

If running Ollama and the model on your local machine seems too daunting, or too heavy for your machine's resources, an alternative is to use the computing resources that Google Colab provides for research purposes.

💡

Google Colab resources are meant for research purposes. Please use them judiciously and shut down your instances when not in use. Starting an instance takes 2 clicks and about 1 minute, so please shut it down if you know you won't need it for the next 30 minutes.

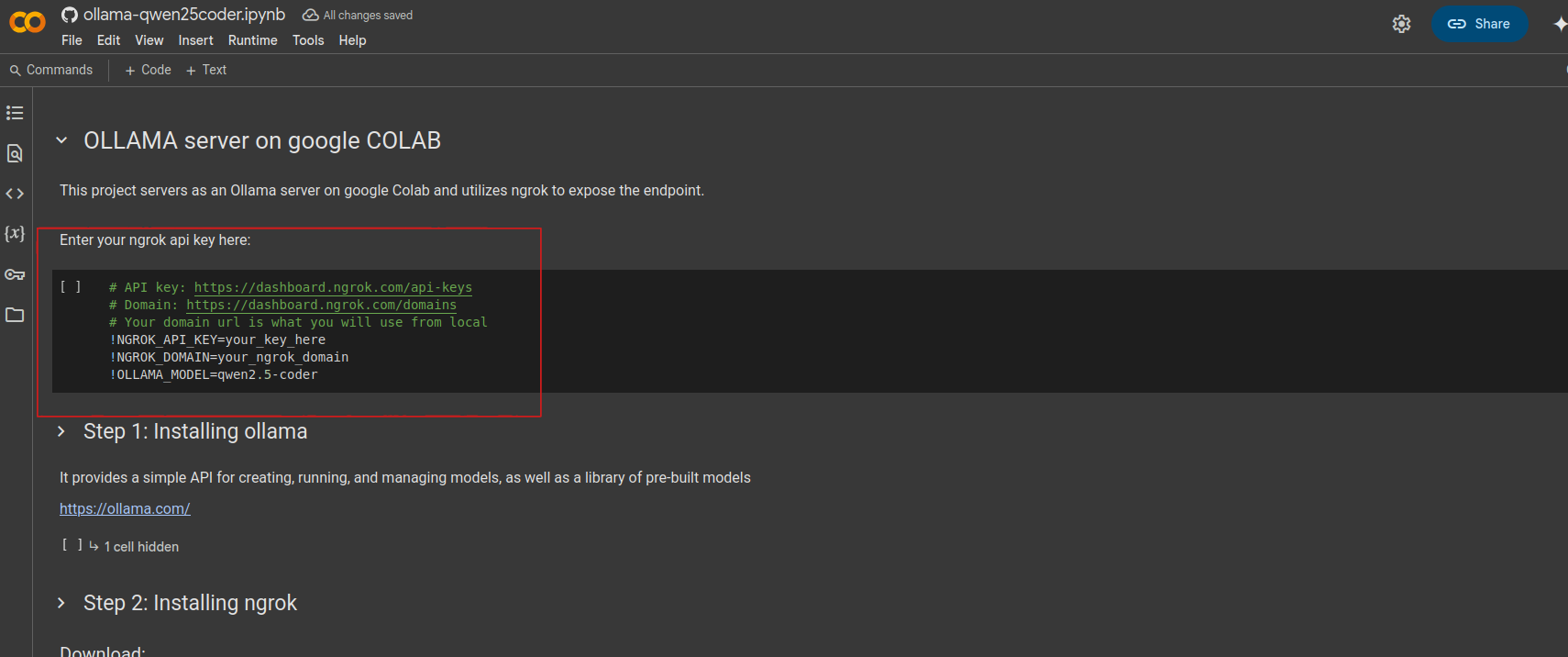

- This is my GitHub gist: https://gist.github.com/sudhirnakka-dev/9d883531d793e8a6c5e62b39ed9712ac

- Open it in Google Colab. Please read through the script to understand what it does; you should always read public GitHub gists before running them.

- Set your ngrok API key and domain URL, then run the Colab notebook (Runtime > Run all)

- Once execution finishes, your domain URL (or the endpoint shown in the ngrok dashboard) is what you will use in IntelliJ
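Before pointing IntelliJ at the tunnel, it can help to confirm that the endpoint actually answers. The following is a minimal sketch, assuming the notebook exposes the standard Ollama HTTP API through ngrok. The `OLLAMA_URL` value is a placeholder rather than a value from the gist, and `normalize_url` and `check_ollama` are illustrative helper names.

```shell
#!/usr/bin/env bash
# Placeholder: replace with your own domain from the ngrok dashboard.
OLLAMA_URL="${OLLAMA_URL:-https://your-domain.ngrok-free.app}"

# Strip one trailing slash so "$base/api/tags" never contains "//".
normalize_url() {
  printf '%s\n' "${1%/}"
}

# Succeeds if the Ollama API answers on the given base URL.
# GET /api/tags lists the models the server has already pulled.
check_ollama() {
  local base
  base="$(normalize_url "$1")"
  curl -fsS --max-time 10 "$base/api/tags"
}

# Usage (needs the Colab notebook to be running):
#   check_ollama "$OLLAMA_URL"
```

If the call prints a JSON document with a `models` array, the same base URL should work as the server URL in the IDE.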

Setting Up Ollama Locally with Qwen 2.5 Coder 7B (Involved but local)

Prerequisites:

- System requirements (e.g., 16GB+ RAM, i7 or comparable CPU, GPU optional but recommended)

- Software dependencies (e.g., Docker)

Docker guide:

- Follow the official documentation here: https://hub.docker.com/r/ollama/

- For CPU only:

docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

- For GPU:

docker run -d --gpus=all -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

- For advanced configuration and NVIDIA/AMD GPU setups, follow the official Ollama link posted above.

- Once the Ollama Docker container is up and running, execute the following to download and serve the Qwen 2.5 Coder model:

docker exec -it ollama ollama run qwen2.5-coder
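Once the download finishes, a quick check confirms that the container and the model are both visible. This is a sketch under a few assumptions: the container is named `ollama` as in the commands above, the model is registered under the Ollama library name `qwen2.5-coder`, and `has_model` and `verify_ollama` are illustrative helpers, not part of Ollama.

```shell
#!/usr/bin/env bash
# Succeeds if the JSON read from stdin lists a model whose name starts with $1.
has_model() {
  grep -Eq "\"name\"[[:space:]]*:[[:space:]]*\"$1"
}

# Check the container, the models inside it, and the HTTP API the IDE will use.
verify_ollama() {
  docker ps --filter name=ollama --format '{{.Names}}: {{.Status}}'
  docker exec ollama ollama list
  curl -fsS http://localhost:11434/api/tags | has_model "qwen2.5-coder" \
    && echo "model is available" \
    || echo "model not found; the download may still be running"
}

# Usage (needs Docker and the running container):
#   verify_ollama
```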

- Troubleshooting Common Issues

- Address potential problems (e.g., insufficient VRAM, port conflicts)
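The two problems named above can usually be narrowed down with a handful of commands. The sketch below assumes the container name `ollama` from the earlier commands. The alternative host port 11435 and the helper names `ollama_run_cmd` and `diagnose_ollama` are illustrative choices.

```shell
#!/usr/bin/env bash
# Print the docker run command for a given host port.
# The container port stays 11434; only the host side changes.
ollama_run_cmd() {
  local host_port="${1:-11434}"
  printf 'docker run -d -v ollama:/root/.ollama -p %s:11434 --name ollama ollama/ollama\n' "$host_port"
}

# Collect the usual diagnostics in one place.
diagnose_ollama() {
  # Port conflict: show whatever is already listening on 11434.
  lsof -i :11434 || echo "nothing is listening on 11434"
  # Container logs usually name the failure (GPU not found, out of memory).
  docker logs --tail 50 ollama
  # Lists loaded models and whether they run on GPU, CPU, or a mix.
  docker exec ollama ollama ps
}

# Port conflict: remove the old container, then run the printed command.
#   docker rm -f ollama
#   ollama_run_cmd 11435
# Insufficient VRAM: try a smaller variant of the same model.
#   docker exec -it ollama ollama run qwen2.5-coder:3b
```

If the host port changes, the server URL entered in the IDE changes with it (for example, http://localhost:11435).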

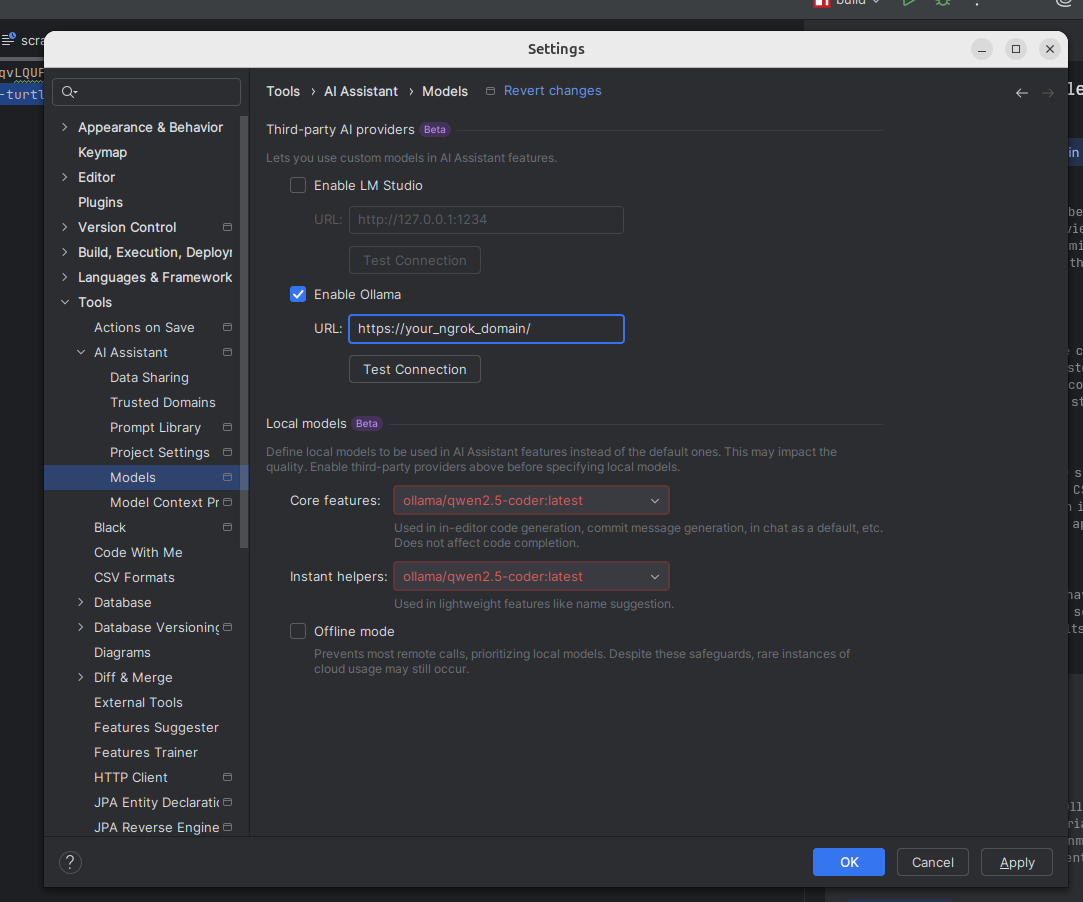

Configuring IntelliJ AI Assistant with Ollama

Whether you use the ngrok domain URL from Colab or a local Ollama server, the steps in IntelliJ are the same:

- Accessing AI Assistant Settings

- Navigate to IntelliJ IDEA settings/preferences

- Locate the AI Assistant configuration panel

- Enabling Ollama Integration

- Enable Ollama support in AI Assistant settings

- Specify the local Ollama server URL (e.g., http://localhost:11434)

- Select the Qwen 2.5 Coder 7B model

- Customizing AI Behavior

- Adjust context settings for codebase awareness

- Configure prompts for specific tasks (e.g., code completion, debugging)

- Testing the Integration

- Run sample queries to verify Junie’s responses using the Qwen 2.5 model

- Example: Autocomplete a function or explain a code snippet
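If the IDE returns nothing useful, sending the same kind of query straight to Ollama shows whether the problem lies with the model or with the plugin configuration. This is a minimal sketch using Ollama's `/api/generate` endpoint. The model name `qwen2.5-coder`, the default URL, and the helpers `build_payload` and `ask_ollama` are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Build a non-streaming /api/generate request body.
# Simplification: the prompt must not contain double quotes or backslashes,
# because no JSON escaping is done here.
build_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}\n' "$1" "$2"
}

# Send one prompt to the server and print the raw JSON reply.
ask_ollama() {
  curl -fsS "${OLLAMA_URL:-http://localhost:11434}/api/generate" \
    -H 'Content-Type: application/json' \
    -d "$(build_payload "$1" "$2")"
}

# Usage (needs a running Ollama server with the model pulled):
#   ask_ollama qwen2.5-coder "Explain what this does: for i in range(3): print(i)"
```

The generated text is in the `response` field of the JSON that comes back.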

Saving Credits

- For peace of mind, you can put IntelliJ's AI Assistant in offline mode

- This restricts its communication with the AI Assistant/Junie servers

Conclusion

- Recap of benefits: Unlimited AI usage, privacy, and cost savings

- Encouragement to experiment with local and Colab setups

- Call to action: Share feedback or explore other Ollama models for specific use cases

References

- Official Ollama documentation

- JetBrains AI Assistant documentation

- Qwen 2.5 Coder model details from Alibaba Cloud

- Google Colab setup guides for running LLMs